Or knowing the minimum amount so that you can build something…

Just hearing “Machine Learning”, “Neural Networks”, etc. can be exhausting and, honestly, incredibly confusing, but does it have to be?

In some respects, yes. As with learning any new subject, there is core knowledge that is needed, and with ML the learning curve is quite steep.

Uber has recently released Ludwig which is “a toolbox built on top of TensorFlow that allows to train and test deep learning models without the need to write code.”

Fantastic! Now I don’t need to learn to code to produce initial models!

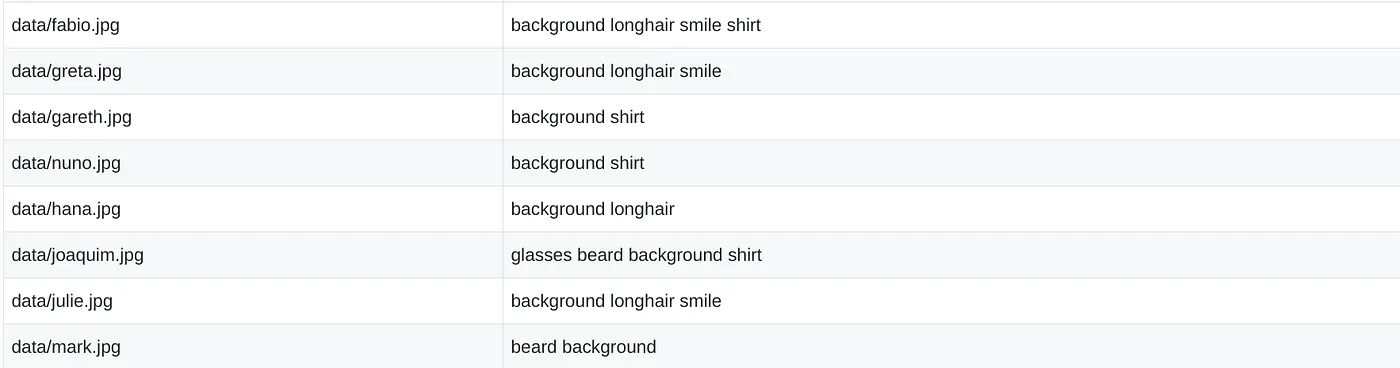

YLD has just released a new website (YLD.io, check it out if you haven’t), which required some of our staff to have their photos taken. Looking at these pictures for the first time, I noticed that they all had the same background, so I thought it would be fun to perform a simple multi-label classification on them! 👩‍🔬

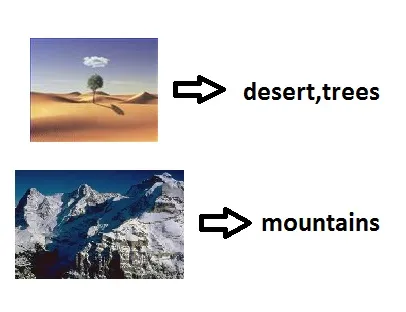

What is Multi-Label classification?

In essence, it is multiple textual tags applied to a single input. In our case, the input is an image.

Because we are using “supervised learning”, we need to create our own tags for the images, thus supervising the algorithm. If we managed to make something that could create the word and image associations without us providing hints, that would be called “unsupervised learning”.

What this means for me is that I had to perform a manual data entry task: adding all of the tags that I thought were correct next to each image location I had in a CSV.
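As a rough illustration of that data entry step, the sketch below writes a CSV with one row per image. The column names image_path and tags are the ones this post uses for its CSV; the file names, the tag values, and the output file name dataset.csv are placeholders, not the real data. It also assumes the tags for one image are written as a single space-separated string, which is how Ludwig expects set features to be provided.

```python
import csv

# Hypothetical rows: the paths and tags below are placeholders, not the real YLD data.
ROWS = [
    ("images/person_01.jpg", "glasses beard smiling"),
    ("images/person_02.jpg", "long_hair smiling"),
    ("images/person_03.jpg", "glasses short_hair"),
]


def write_dataset(rows, path="dataset.csv"):
    """Write (image_path, tags) pairs to a CSV file with a header row."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["image_path", "tags"])
        writer.writerows(rows)


if __name__ == "__main__":
    write_dataset(ROWS)
```

In practice you would probably fill this file in by hand in a spreadsheet, as I did; the script is only there to show the shape of the data.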

These images all looked fairly similar to the picture of me. Note that I was not actually in the training set.

Once this is sorted, the next step is to “build” a machine learning algorithm. Now I know, this may seem difficult, but with Ludwig it is just configuration via a YAML file.

The input_features key matches up one-to-one with our CSV file’s first column, image_path, and output_features matches up with our set of tags in our CSV file.

The training key is used to tune how the model is trained. Here I have just increased the number of epochs (the number of passes over the training data) and the learning_rate, which is the step size of the algorithm.
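A configuration along the lines described above might look like the sketch below, kept as YAML text inside a small Python script so it can be saved to disk. The key names input_features, output_features, training, epochs and learning_rate come from this post. The feature types image and set are my assumption of what fits this task, and the numeric values and the file name model_definition.yaml are placeholders rather than the settings used for the real model.

```python
# Sketch of a Ludwig model definition, stored as YAML text.
# Assumptions: feature types `image` and `set`; the epochs and
# learning_rate values are placeholders, not the original settings.
MODEL_DEFINITION = """\
input_features:
  - name: image_path
    type: image

output_features:
  - name: tags
    type: set

training:
  epochs: 200
  learning_rate: 0.005
"""


def write_model_definition(path="model_definition.yaml"):
    """Save the YAML text above so it can be passed to Ludwig."""
    with open(path, "w") as f:
        f.write(MODEL_DEFINITION)


if __name__ == "__main__":
    write_model_definition()
```

Each name under input_features and output_features has to match a column header in the CSV exactly, which is why image_path and tags appear here unchanged.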

So now we are ready to train. This can take some time, so grab a coffee.
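Training is kicked off from the command line. The sketch below only builds and prints the command; the flags --data_csv and --model_definition_file are the ones from the Ludwig release available when this was written (newer releases may name them differently), and dataset.csv and model_definition.yaml are placeholder file names.

```python
import shlex


def train_command(data_csv="dataset.csv", model_definition="model_definition.yaml"):
    """Build the argument list for a `ludwig train` run."""
    return [
        "ludwig", "train",
        "--data_csv", data_csv,
        "--model_definition_file", model_definition,
    ]


# Print the command so it can be copied into a terminal.
print(shlex.join(train_command()))
# ludwig train --data_csv dataset.csv --model_definition_file model_definition.yaml
```

If you would rather launch it from Python, the same list can be handed to subprocess.run.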

Once training has completed, Ludwig will have created a new folder named “results”, which is where the generated model and parameters are stored. But how do we get values out of this?
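The answer is Ludwig's predict command, pointed at a CSV of images and at the trained model. As a hedged sketch, the snippet below builds that command with the --data_csv and --model_path flags from the Ludwig release of the time. Both paths are placeholders: new_images.csv is a hypothetical file, and the exact location of the model inside the results folder is an assumption, so check your own folder for the real path.

```python
import shlex


def predict_command(data_csv, model_path):
    """Build the argument list for a `ludwig predict` run."""
    return ["ludwig", "predict", "--data_csv", data_csv, "--model_path", model_path]


# Both paths are placeholders: point them at your own CSV and trained model folder.
cmd = predict_command("new_images.csv", "results/experiment_run/model")
print(shlex.join(cmd))
```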

Running the predictions generates a new results_0 folder with some new CSV files, which hold the predicted values! For us, we want to look at the tags_predictions.csv file.

Hurrah! After running my predictions, we can cat that file and see the predicted tags:

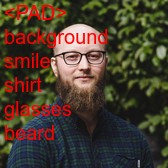

Now if we want to take the input image and overlay the text, we can write a small snippet of code that combines the two, and we get something like this picture of me!

There are loads of other examples which can easily be used. If you are struggling to figure out something to apply this to, or if you don’t have enough data, you can use https://www.kaggle.com/c/titanic as a dataset.